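Implement audio and video interaction

This article describes how to integrate the Agora RTC SDK and build a simple real-time interactive app from scratch with a small amount of code. The app supports two scenarios: interactive live streaming and video calls.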

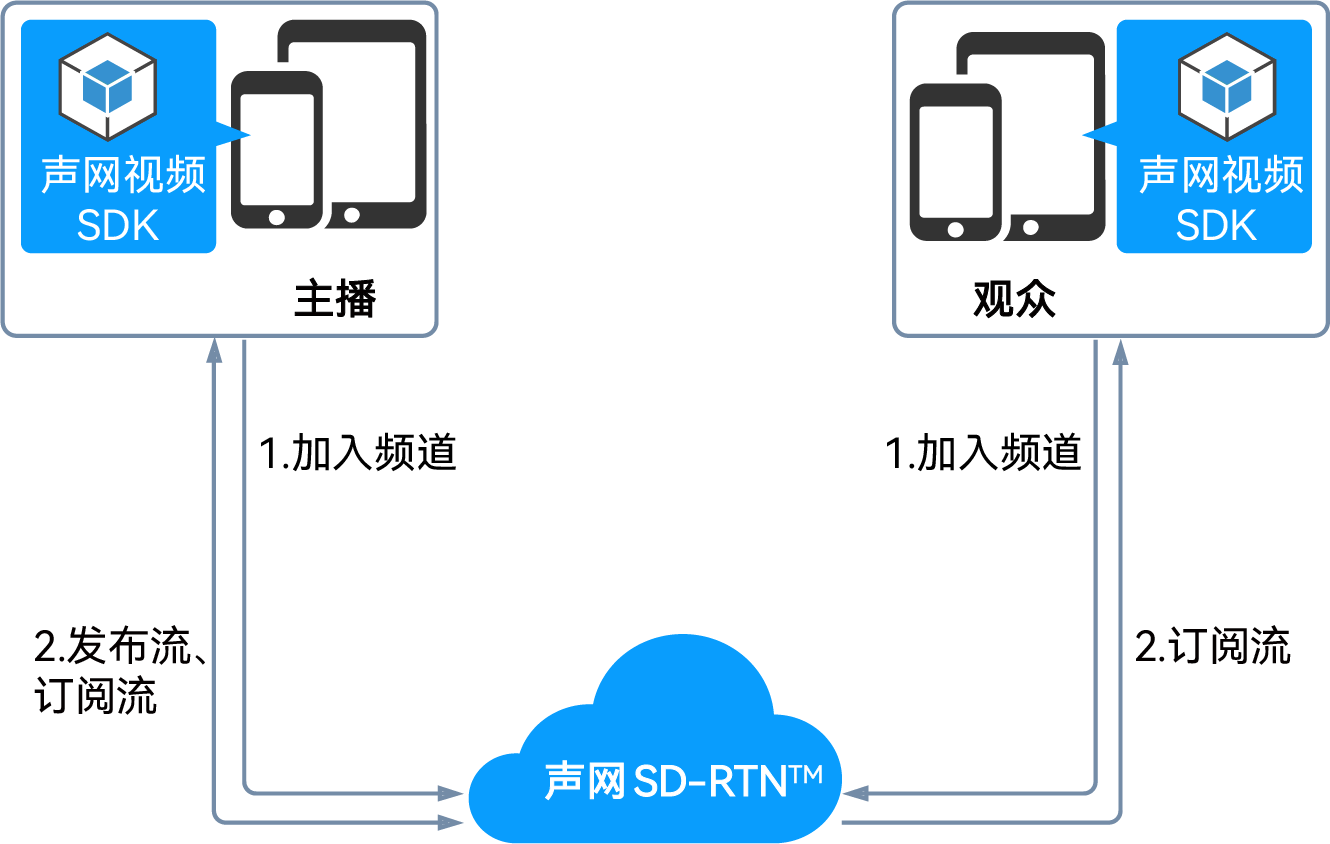

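Before you start, get familiar with the following basic concepts of real-time audio and video interaction:

- Agora RTC SDK: an SDK developed by Agora that helps developers add real-time audio and video interaction to their apps.
- Channel: a path for transmitting data. Users in the same channel can interact in real time.
- Host: can publish audio and video in a channel, and can also subscribe to the audio and video published by other hosts.
- Audience: can subscribe to audio and video in a channel, but has no permission to publish.
- Interactive live streaming: users in a channel are either hosts or audience members. Only hosts can speak freely and be seen by other users.
- Video call: users in a channel are not divided into hosts and audience. All users can speak and see each other.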
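What separates the two scenarios is who may publish. The minimal sketch below encodes that rule in a hypothetical helper, canPublish, which is not part of the Agora SDK. The mode names "live" and "rtc" are the values this article later passes to createClient.

```javascript
// Hypothetical helper (not an Agora SDK API): models which users may publish
// audio and video, based on the concepts above.
// mode: "live" (interactive live streaming) or "rtc" (video call)
// role: "host" or "audience" (only meaningful in "live" mode)
function canPublish(mode, role) {
  if (mode === "rtc") {
    // Video call: no host/audience distinction, so everyone can publish.
    return true;
  }
  if (mode === "live") {
    // Interactive live streaming: only hosts can publish.
    return role === "host";
  }
  throw new Error(`Unknown mode: ${mode}`);
}

console.log(canPublish("live", "host"));     // true
console.log(canPublish("live", "audience")); // false
console.log(canPublish("rtc"));              // true
```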

The following figure uses interactive live streaming as an example to show the basic workflow of audio and video interaction in an app:

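Prerequisites

Before you start, prepare a development environment that meets the following requirements:

- A Windows or macOS computer that meets the following requirements:
  - A browser supported by the Agora Web SDK. Agora strongly recommends the latest stable version of Google Chrome.
  - Physical audio and video capture devices (a microphone and a camera).
  - Internet access. If your network is behind a firewall, see Enterprise firewall restrictions to use Agora services normally.
  - A 2.2 GHz Intel 2nd-generation i3/i5/i7 processor, or another processor with equivalent performance.
- Node.js and npm installed.
- A valid Agora account and Agora project. See Enable services, and get the following information from Agora Console:
  - App ID: a random string generated by Agora that identifies your app.
  - Temporary token: when your app client joins a channel, the token is used to authenticate the user. A temporary token is valid for 24 hours.
  - Channel name: a string that identifies the channel.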
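A temporary token stops working 24 hours after it is generated, so it can help to track when you generated it. The sketch below is a hypothetical helper, not an Agora API. It assumes you record the generation time yourself, and it applies a safety margin (an assumption, 5 minutes by default) so that you renew the token slightly before it actually expires.

```javascript
// Hypothetical helper (not an Agora SDK API).
// A temporary token from Agora Console is valid for 24 hours.
const TEMP_TOKEN_LIFETIME_MS = 24 * 60 * 60 * 1000;

// issuedAtMs: when you generated the token, in milliseconds since the epoch
//             (recorded by you; the SDK does not provide it).
// nowMs:      the current time, injectable for testing.
// marginMs:   treat the token as expired this long before the real deadline.
function isTempTokenExpired(issuedAtMs, nowMs = Date.now(), marginMs = 5 * 60 * 1000) {
  return nowMs >= issuedAtMs + TEMP_TOKEN_LIFETIME_MS - marginMs;
}

const issuedAt = Date.UTC(2024, 0, 1, 8, 0, 0);
console.log(isTempTokenExpired(issuedAt, issuedAt + 60 * 60 * 1000));      // false: 1 hour later
console.log(isTempTokenExpired(issuedAt, issuedAt + 24 * 60 * 60 * 1000)); // true: 24 hours later
```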

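Create a Web project

Create a folder named agora_web_quickstart. A Web client project must contain at least the following files:

- index.html: defines the user interface of the web app.
- basicEngagement.js: implements the app logic with AgoraRTCClient.
- package.json: installs and manages the project dependencies. To create it, open a terminal in the agora_web_quickstart directory and run npm init.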

Integrate the SDK

Follow these steps to integrate the Agora Web SDK into your project with npm:

- Add agora-rtc-sdk-ng and its version to the dependencies field of package.json:

```json
{
  "name": "agora_web_quickstart",
  "version": "1.0.0",
  "description": "",
  "main": "basicEngagement.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "dependencies": {
    "agora-rtc-sdk-ng": "latest"
  },
  "author": "",
  "license": "ISC"
}
```

- Copy the following code into basicEngagement.js to import the AgoraRTC module into your project:

```javascript
import AgoraRTC from "agora-rtc-sdk-ng";
```

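Implement the user interface

Copy the following code into index.html to implement the client user interface. Use the page that matches your scenario.

Interactive live streaming: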

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8" />
  <title>Web SDK Video Quickstart</title>
  <!--
  This line is used to refer to the bundle.js file packaged by webpack. A sample webpack configuration is shown in the later step of running your app.
  -->
  <script src="./dist/bundle.js"></script>
</head>
<body>
  <h2 class="left-align">Web SDK Video Quickstart</h2>
  <div class="row">
    <div>
      <button type="button" id="host-join">Join as host</button>
      <button type="button" id="audience-join">Join as audience</button>
      <button type="button" id="leave">Leave</button>
    </div>
  </div>
</body>
</html>
```

Video call:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8" />
  <title>Web SDK Video Quickstart</title>
  <!--
  This line is used to refer to the bundle.js file packaged by webpack. A sample webpack configuration is shown in the later step of running your app.
  -->
  <script src="./dist/bundle.js"></script>
</head>
<body>
  <h2 class="left-align">Web SDK Video Quickstart</h2>
  <div class="row">
    <div>
      <button type="button" id="join">JOIN</button>
      <button type="button" id="leave">LEAVE</button>
    </div>
  </div>
</body>
</html>
```
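In both scenarios, the app creates the same kind of DIV container for every video track it plays. If you want to factor that repetition out, the helpers sketched below keep the ID scheme (the user's UID as a string) consistent between the code that creates containers and the code that removes them. createPlayerContainer and removePlayerContainer are hypothetical names, not Agora APIs. The page's document object is passed in as doc so that the helpers can also run outside a browser.

```javascript
// Hypothetical helpers (not Agora SDK APIs) for the DIV containers that
// a video track's play(container) method renders into.
function createPlayerContainer(doc, uid, label) {
  const container = doc.createElement("div");
  // Use the UID as a string so that getElementById lookups always match.
  container.id = String(uid);
  container.textContent = `${label} ${uid}`;
  container.style.width = "640px";
  container.style.height = "480px";
  doc.body.append(container);
  return container;
}

function removePlayerContainer(doc, uid) {
  const container = doc.getElementById(String(uid));
  // The container may not exist, for example if the user only published audio.
  if (container) {
    container.remove();
  }
}
```

In basicEngagement.js you could then write, for example, rtc.localVideoTrack.play(createPlayerContainer(document, options.uid, "Local user")).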

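Implementation

This section describes how to implement each audio and video interaction scenario. You can first copy the complete sample code into your project to quickly try the basic real-time audio and video features, and then follow the implementation steps to understand the core API calls.

Interactive live streaming:

The following figure shows the basic API calls for audio and video live streaming. Note that the methods in the figure are called on different objects.

To quickly try interactive live streaming, copy the following code into basicEngagement.js. Replace the appId and token values in options with your own App ID and temporary token.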

```javascript
import AgoraRTC from "agora-rtc-sdk-ng";

let rtc = {
  // For the local audio and video tracks.
  localAudioTrack: null,
  localVideoTrack: null,
  client: null,
};

let options = {
  // Pass your App ID here.
  appId: "your_app_id",
  // Set the channel name.
  channel: "test",
  // Pass your temp token here.
  token: "your_temp_token",
  // Set the user ID. Each user in the channel needs a unique UID.
  uid: 123456,
};

async function startBasicLiveStreaming() {
  // Create an AgoraRTCClient object in live streaming mode.
  rtc.client = AgoraRTC.createClient({ mode: "live", codec: "vp8" });

  // Register the event listeners once, before joining, so that hosts and
  // audience members alike can see and hear the hosts in the channel.
  rtc.client.on("user-published", async (user, mediaType) => {
    // Subscribe to a remote user.
    await rtc.client.subscribe(user, mediaType);
    console.log("subscribe success");

    // If the subscribed track is video.
    if (mediaType === "video") {
      // Get `RemoteVideoTrack` in the `user` object.
      const remoteVideoTrack = user.videoTrack;
      // Dynamically create a container in the form of a DIV element for playing the remote video track.
      const remotePlayerContainer = document.createElement("div");
      // Specify the ID of the DIV container. You can use the `uid` of the remote user.
      remotePlayerContainer.id = user.uid.toString();
      remotePlayerContainer.textContent = "Remote user " + user.uid.toString();
      remotePlayerContainer.style.width = "640px";
      remotePlayerContainer.style.height = "480px";
      document.body.append(remotePlayerContainer);
      // Play the remote video track.
      // Pass the DIV container and the SDK dynamically creates a player in the container for playing the remote video track.
      remoteVideoTrack.play(remotePlayerContainer);
    }

    // If the subscribed track is audio.
    if (mediaType === "audio") {
      // Get `RemoteAudioTrack` in the `user` object.
      const remoteAudioTrack = user.audioTrack;
      // Play the audio track. No need to pass any DOM element.
      remoteAudioTrack.play();
    }
  });

  rtc.client.on("user-unpublished", (user, mediaType) => {
    // Only video tracks have a DIV container.
    if (mediaType !== "video") return;
    // Get the dynamically created DIV container and destroy it, if it exists.
    const remotePlayerContainer = document.getElementById(user.uid.toString());
    if (remotePlayerContainer) remotePlayerContainer.remove();
  });

  window.onload = function () {
    document.getElementById("host-join").onclick = async function () {
      await rtc.client.setClientRole("host");
      await rtc.client.join(options.appId, options.channel, options.token, options.uid);
      // Create an audio track from the audio sampled by a microphone.
      rtc.localAudioTrack = await AgoraRTC.createMicrophoneAudioTrack();
      // Create a video track from the video captured by a camera.
      rtc.localVideoTrack = await AgoraRTC.createCameraVideoTrack();
      // Publish the local audio and video tracks to the channel.
      await rtc.client.publish([rtc.localAudioTrack, rtc.localVideoTrack]);
      // Dynamically create a container in the form of a DIV element for playing the local video track.
      const localPlayerContainer = document.createElement("div");
      // Specify the ID of the DIV container. You can use the `uid` of the local user.
      localPlayerContainer.id = options.uid.toString();
      localPlayerContainer.textContent = "Local user " + options.uid;
      localPlayerContainer.style.width = "640px";
      localPlayerContainer.style.height = "480px";
      document.body.append(localPlayerContainer);
      // Play the local video track in the container.
      rtc.localVideoTrack.play(localPlayerContainer);
      console.log("publish success!");
    };

    document.getElementById("audience-join").onclick = async function () {
      // An audience member only joins and subscribes; the listeners above handle playback.
      await rtc.client.setClientRole("audience");
      await rtc.client.join(options.appId, options.channel, options.token, options.uid);
    };

    document.getElementById("leave").onclick = async function () {
      // Close the local tracks. An audience member has none, so check first.
      if (rtc.localAudioTrack) {
        rtc.localAudioTrack.close();
        rtc.localAudioTrack = null;
      }
      if (rtc.localVideoTrack) {
        rtc.localVideoTrack.close();
        rtc.localVideoTrack = null;
      }
      // Destroy the local DIV container, if it exists.
      const localPlayerContainer = document.getElementById(options.uid.toString());
      if (localPlayerContainer) localPlayerContainer.remove();
      // Traverse all remote users and destroy the dynamically created DIV containers.
      rtc.client.remoteUsers.forEach(user => {
        const playerContainer = document.getElementById(user.uid.toString());
        playerContainer && playerContainer.remove();
      });
      // Leave the channel.
      await rtc.client.leave();
    };
  };
}

startBasicLiveStreaming();
```

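Video call:

The following figure shows the API call flow for audio and video interaction. Note that the methods in the figure are called on different objects.

To quickly try a video call, copy the following code into basicEngagement.js. Replace the appId and token values in options with your own App ID and temporary token.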

```javascript
import AgoraRTC from "agora-rtc-sdk-ng";

let rtc = {
  localAudioTrack: null,
  localVideoTrack: null,
  client: null,
};

let options = {
  // Pass your App ID here.
  appId: "Your App ID",
  // Set the channel name.
  channel: "test",
  // Pass your temp token here.
  token: "Your temp token",
  // Set the user ID. Each user in the channel needs a unique UID.
  uid: 123456,
};

async function startBasicCall() {
  // Create an AgoraRTCClient object.
  rtc.client = AgoraRTC.createClient({ mode: "rtc", codec: "vp8" });

  // Listen for the "user-published" event, from which you can get an AgoraRTCRemoteUser object.
  rtc.client.on("user-published", async (user, mediaType) => {
    // Subscribe to the remote user when the SDK triggers the "user-published" event.
    await rtc.client.subscribe(user, mediaType);
    console.log("subscribe success");

    // If the remote user publishes a video track.
    if (mediaType === "video") {
      // Get the RemoteVideoTrack object in the AgoraRTCRemoteUser object.
      const remoteVideoTrack = user.videoTrack;
      // Dynamically create a container in the form of a DIV element for playing the remote video track.
      const remotePlayerContainer = document.createElement("div");
      // Specify the ID of the DIV container. You can use the uid of the remote user.
      remotePlayerContainer.id = user.uid.toString();
      remotePlayerContainer.textContent = "Remote user " + user.uid.toString();
      remotePlayerContainer.style.width = "640px";
      remotePlayerContainer.style.height = "480px";
      document.body.append(remotePlayerContainer);
      // Play the remote video track.
      // Pass the DIV container and the SDK dynamically creates a player in the container for playing the remote video track.
      remoteVideoTrack.play(remotePlayerContainer);
    }

    // If the remote user publishes an audio track.
    if (mediaType === "audio") {
      // Get the RemoteAudioTrack object in the AgoraRTCRemoteUser object.
      const remoteAudioTrack = user.audioTrack;
      // Play the remote audio track. No need to pass any DOM element.
      remoteAudioTrack.play();
    }
  });

  // Listen for the "user-unpublished" event. Register it once, outside the
  // "user-published" callback, so that it is not added again on every publish.
  rtc.client.on("user-unpublished", (user, mediaType) => {
    // Only video tracks have a DIV container.
    if (mediaType !== "video") return;
    // Get the dynamically created DIV container and destroy it, if it exists.
    const remotePlayerContainer = document.getElementById(user.uid.toString());
    if (remotePlayerContainer) remotePlayerContainer.remove();
  });

  window.onload = function () {
    document.getElementById("join").onclick = async function () {
      // Join an RTC channel.
      await rtc.client.join(options.appId, options.channel, options.token, options.uid);
      // Create a local audio track from the audio sampled by a microphone.
      rtc.localAudioTrack = await AgoraRTC.createMicrophoneAudioTrack();
      // Create a local video track from the video captured by a camera.
      rtc.localVideoTrack = await AgoraRTC.createCameraVideoTrack();
      // Publish the local audio and video tracks to the RTC channel.
      await rtc.client.publish([rtc.localAudioTrack, rtc.localVideoTrack]);
      // Dynamically create a container in the form of a DIV element for playing the local video track.
      const localPlayerContainer = document.createElement("div");
      // Specify the ID of the DIV container. You can use the uid of the local user.
      localPlayerContainer.id = options.uid.toString();
      localPlayerContainer.textContent = "Local user " + options.uid;
      localPlayerContainer.style.width = "640px";
      localPlayerContainer.style.height = "480px";
      document.body.append(localPlayerContainer);
      // Play the local video track.
      // Pass the DIV container and the SDK dynamically creates a player in the container for playing the local video track.
      rtc.localVideoTrack.play(localPlayerContainer);
      console.log("publish success!");
    };

    document.getElementById("leave").onclick = async function () {
      // Destroy the local audio and video tracks, if they were created.
      if (rtc.localAudioTrack) {
        rtc.localAudioTrack.close();
        rtc.localAudioTrack = null;
      }
      if (rtc.localVideoTrack) {
        rtc.localVideoTrack.close();
        rtc.localVideoTrack = null;
      }
      // Destroy the local DIV container, if it exists.
      const localPlayerContainer = document.getElementById(options.uid.toString());
      if (localPlayerContainer) localPlayerContainer.remove();
      // Traverse all remote users and destroy the dynamically created DIV containers.
      rtc.client.remoteUsers.forEach(user => {
        const playerContainer = document.getElementById(user.uid.toString());
        playerContainer && playerContainer.remove();
      });
      // Leave the channel.
      await rtc.client.leave();
    };
  };
}

startBasicCall();
```

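See Test the app to run the project and check the result.

The following sections describe the core API calls used to implement audio and video interaction.

Import the Agora component

Import the AgoraRTC component.

```javascript
import AgoraRTC from "agora-rtc-sdk-ng";
```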

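Define the App ID and token

Pass in the App ID and temporary token you obtained from Agora Console, together with the channel name you entered when generating the temporary token. These values are used later to initialize the client and join the channel.

```javascript
let rtc = {
  // For the local audio and video tracks.
  localAudioTrack: null,
  localVideoTrack: null,
  client: null,
};

let options = {
  // Pass your App ID here.
  appId: "your_app_id",
  // Set the channel name. It must match the channel name used to generate the temporary token.
  channel: "test",
  // Pass your temporary token here.
  token: "your_temp_token",
  // Set the user ID. Each user in the channel needs a unique UID.
  uid: 123456,
};
```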

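Initialize the client

Interactive live streaming: call createClient with mode set to "live" (the live streaming scenario) to create an AgoraRTCClient object.

```javascript
rtc.client = AgoraRTC.createClient({ mode: "live", codec: "vp8" });
```

Video call: call createClient with mode set to "rtc" (the call scenario) to create an AgoraRTCClient object.

```javascript
rtc.client = AgoraRTC.createClient({ mode: "rtc", codec: "vp8" });
```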
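Because the two scenarios differ only in the mode passed to createClient, you might wrap that choice in one function. In the sketch below, createClientForScenario and the scenario names are hypothetical. The AgoraRTC module is passed in as a parameter instead of imported so that the function can be exercised with a stand-in object.

```javascript
// Hypothetical wrapper (not an Agora SDK API) around AgoraRTC.createClient.
// The scenario names are chosen for this sketch only.
const MODES = new Map([
  ["live-streaming", "live"], // hosts and audience
  ["video-call", "rtc"],      // everyone can publish
]);

function createClientForScenario(AgoraRTC, scenario) {
  const mode = MODES.get(scenario);
  if (!mode) {
    throw new Error(`Unsupported scenario: ${scenario}`);
  }
  return AgoraRTC.createClient({ mode, codec: "vp8" });
}
```

In the app itself you would call, for example, rtc.client = createClientForScenario(AgoraRTC, "video-call").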

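Join a channel and publish audio and video tracks

Interactive live streaming:

- Call setClientRole to set role to "host" or "audience". A host can publish and subscribe to tracks; an audience member can only subscribe.
- Call join to join an RTC channel. Pass in the App ID, channel name, token, and user ID, in that order.
- After setting the role to host, call createMicrophoneAudioTrack to create a local audio track from the audio captured by the microphone, and call createCameraVideoTrack to create a local video track from the video captured by the camera. Then call publish with these local tracks as parameters to publish them to the channel.
- Create a "div" container to play the local video track.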

```javascript
document.getElementById("host-join").onclick = async function () {
  // Set the role to host before joining. setClientRole returns a Promise.
  await rtc.client.setClientRole("host");
  await rtc.client.join(options.appId, options.channel, options.token, options.uid);
  // Create an audio track from the audio sampled by a microphone.
  rtc.localAudioTrack = await AgoraRTC.createMicrophoneAudioTrack();
  // Create a video track from the video captured by a camera.
  rtc.localVideoTrack = await AgoraRTC.createCameraVideoTrack();
  // Publish the local audio and video tracks to the channel.
  await rtc.client.publish([rtc.localAudioTrack, rtc.localVideoTrack]);
  // Dynamically create a container in the form of a DIV element for playing the local video track.
  const localPlayerContainer = document.createElement("div");
  // Specify the ID of the DIV container. You can use the uid of the local user.
  localPlayerContainer.id = options.uid.toString();
  localPlayerContainer.textContent = "Local user " + options.uid;
  localPlayerContainer.style.width = "640px";
  localPlayerContainer.style.height = "480px";
  document.body.append(localPlayerContainer);
  // Play the local video track in the container.
  rtc.localVideoTrack.play(localPlayerContainer);
  console.log("publish success!");
};
```

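Video call:

- Call join to join an RTC channel. Pass in the App ID, channel name, token, and user ID, in that order.
- Call createMicrophoneAudioTrack to create a local audio track from the audio captured by the microphone, and call createCameraVideoTrack to create a local video track from the video captured by the camera. Then call publish with these local tracks as parameters to publish them to the channel.
- Create a "div" container to play the local video track.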

```javascript
document.getElementById("join").onclick = async function () {
  // Join an RTC channel.
  await rtc.client.join(options.appId, options.channel, options.token, options.uid);
  // Create a local audio track from the audio sampled by a microphone.
  rtc.localAudioTrack = await AgoraRTC.createMicrophoneAudioTrack();
  // Create a local video track from the video captured by a camera.
  rtc.localVideoTrack = await AgoraRTC.createCameraVideoTrack();
  // Publish the local audio and video tracks to the RTC channel.
  await rtc.client.publish([rtc.localAudioTrack, rtc.localVideoTrack]);
  // Dynamically create a container in the form of a DIV element for playing the local video track.
  const localPlayerContainer = document.createElement("div");
  // Specify the ID of the DIV container. You can use the UID of the local user.
  localPlayerContainer.id = options.uid;
  localPlayerContainer.textContent = "Local user " + options.uid;
  localPlayerContainer.style.width = "640px";
  localPlayerContainer.style.height = "480px";
  document.body.append(localPlayerContainer);
  // Play the local video track.
  // Pass the DIV container and the SDK dynamically creates a player in the container for playing the local video track.
  rtc.localVideoTrack.play(localPlayerContainer);
  console.log("publish success!");
};
```
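The host and audience paths in interactive live streaming share most of their steps. The following sketch folds them into one function to show the order of calls: set the role, join, then create and publish tracks only for a host. joinAndPublish is a hypothetical name. It only calls SDK methods already used in this article (setClientRole, join, createMicrophoneAudioTrack, createCameraVideoTrack, publish), and it receives the AgoraRTC module and the client as parameters so that it can be tested with stand-ins.

```javascript
// Hypothetical helper (not an Agora SDK API) for interactive live streaming.
// Returns the local tracks it created; an audience member gets none.
async function joinAndPublish(AgoraRTC, client, options, role) {
  // Set the role before joining: "host" can publish, "audience" can only subscribe.
  await client.setClientRole(role);
  // join takes the App ID, channel name, token, and user ID, in that order.
  await client.join(options.appId, options.channel, options.token, options.uid);

  if (role !== "host") {
    return { localAudioTrack: null, localVideoTrack: null };
  }

  const localAudioTrack = await AgoraRTC.createMicrophoneAudioTrack();
  const localVideoTrack = await AgoraRTC.createCameraVideoTrack();
  await client.publish([localAudioTrack, localVideoTrack]);
  return { localAudioTrack, localVideoTrack };
}
```

The video call flow in this article does not call setClientRole; every user publishes right after join.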

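Subscribe to and play remote audio and video tracks

Your app listens for the "user-published" and "user-unpublished" events and runs different callback logic for each:

- user-published: triggered when a remote user publishes a media track in the channel. In the callback you get the remote user's AgoraRTCRemoteUser object. Call subscribe to subscribe to the remote user's audio or video track, then call play to play it.
- user-unpublished: triggered when a remote user unpublishes a media track in the channel. In the callback, your code looks up the "div" container it created for that user by the user's UID and removes it, which stops playback of the corresponding video track.

Register both listeners once, right after you create the client. Do not register the user-unpublished listener inside the user-published callback: every publish event would then add another copy of the listener.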

```javascript
// Listen for the "user-published" event, from which you can get an AgoraRTCRemoteUser object.
rtc.client.on("user-published", async (user, mediaType) => {
  // Subscribe to the remote user when the SDK triggers the "user-published" event.
  await rtc.client.subscribe(user, mediaType);
  console.log("subscribe success");

  // If the remote user publishes a video track.
  if (mediaType === "video") {
    // Get the RemoteVideoTrack object in the AgoraRTCRemoteUser object.
    const remoteVideoTrack = user.videoTrack;
    // Dynamically create a container in the form of a DIV element for playing the remote video track.
    const remotePlayerContainer = document.createElement("div");
    // Specify the ID of the DIV container. You can use the uid of the remote user.
    remotePlayerContainer.id = user.uid.toString();
    remotePlayerContainer.textContent = "Remote user " + user.uid.toString();
    remotePlayerContainer.style.width = "640px";
    remotePlayerContainer.style.height = "480px";
    document.body.append(remotePlayerContainer);
    // Play the remote video track.
    // Pass the DIV container and the SDK dynamically creates a player in the container for playing the remote video track.
    remoteVideoTrack.play(remotePlayerContainer);
  }

  // If the remote user publishes an audio track.
  if (mediaType === "audio") {
    // Get the RemoteAudioTrack object in the AgoraRTCRemoteUser object.
    const remoteAudioTrack = user.audioTrack;
    // Play the remote audio track. No need to pass any DOM element.
    remoteAudioTrack.play();
  }
});

// Listen for the "user-unpublished" event, registered once outside the callback above.
rtc.client.on("user-unpublished", (user, mediaType) => {
  // Only video tracks have a DIV container.
  if (mediaType !== "video") return;
  // Get the dynamically created DIV container and destroy it, if it exists.
  const remotePlayerContainer = document.getElementById(user.uid.toString());
  if (remotePlayerContainer) remotePlayerContainer.remove();
});
```
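To see why each listener should be registered exactly once, the sketch below replays a sequence of user-published and user-unpublished events against a minimal stand-in for the client. FakeClient is hypothetical and only mimics an on/emit event pattern; it is not the SDK. A Map keyed by UID stands in for the DOM containers.

```javascript
// A minimal stand-in for a client's event API (hypothetical, for illustration only).
class FakeClient {
  constructor() {
    this.listeners = new Map();
  }
  on(event, listener) {
    if (!this.listeners.has(event)) this.listeners.set(event, []);
    this.listeners.get(event).push(listener);
  }
  emit(event, ...args) {
    for (const listener of this.listeners.get(event) || []) listener(...args);
  }
  listenerCount(event) {
    return (this.listeners.get(event) || []).length;
  }
}

// Stands in for the DIV containers: UID -> label of the playing video.
const containers = new Map();
const client = new FakeClient();

// Register both listeners once, right after creating the client.
client.on("user-published", (user, mediaType) => {
  if (mediaType === "video") containers.set(String(user.uid), `Remote user ${user.uid}`);
});
client.on("user-unpublished", (user, mediaType) => {
  if (mediaType === "video") containers.delete(String(user.uid));
});

// Replay: a remote user publishes audio and video, then stops publishing video.
const remote = { uid: 42 };
client.emit("user-published", remote, "audio");
client.emit("user-published", remote, "video");
console.log(containers.size); // 1
client.emit("user-unpublished", remote, "video");
console.log(containers.size); // 0
console.log(client.listenerCount("user-unpublished")); // 1
```

If the user-unpublished listener were registered inside the user-published callback instead, listenerCount("user-unpublished") would grow by one on every publish event.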

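Leave the channel

Call leave to leave the channel and end the audio and video interaction. Before leaving, close the local tracks (if any) and remove the containers created for video playback.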

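```javascript
document.getElementById("leave").onclick = async function () {
  // Destroy the local audio and video tracks, if they were created.
  // An audience member in interactive live streaming has no local tracks.
  if (rtc.localAudioTrack) {
    rtc.localAudioTrack.close();
    rtc.localAudioTrack = null;
  }
  if (rtc.localVideoTrack) {
    rtc.localVideoTrack.close();
    rtc.localVideoTrack = null;
  }
  // Destroy the local DIV container, if it exists.
  const localPlayerContainer = document.getElementById(options.uid.toString());
  if (localPlayerContainer) localPlayerContainer.remove();
  // Traverse all remote users and destroy the dynamically created DIV containers.
  rtc.client.remoteUsers.forEach(user => {
    const playerContainer = document.getElementById(user.uid.toString());
    playerContainer && playerContainer.remove();
  });
  // Leave the channel.
  await rtc.client.leave();
};
```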
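An audience member never creates local tracks, so calling close() on them unconditionally throws a TypeError. The sketch below is a hypothetical leaveChannel helper that closes only the tracks that exist, removes the remote users' containers, and then calls leave. The rtc object has the same shape as in this article; the page's document is passed in as doc so that the function can be tested with stand-ins.

```javascript
// Hypothetical helper (not an Agora SDK API): leaves the channel safely
// whether the user joined as a host (with local tracks) or as audience (without).
async function leaveChannel(rtc, doc) {
  for (const key of ["localAudioTrack", "localVideoTrack"]) {
    if (rtc[key]) {
      // close() stops the local track and releases the capture device it uses.
      rtc[key].close();
      rtc[key] = null;
    }
  }
  // Remove the containers created for remote users' video tracks.
  for (const user of rtc.client.remoteUsers) {
    const container = doc.getElementById(String(user.uid));
    if (container) container.remove();
  }
  await rtc.client.leave();
}
```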

Test the app

This article uses webpack to bundle the project and webpack-dev-server to run it.

Steps

Follow these steps to test your app:

- In package.json, add webpack, webpack-cli, and webpack-dev-server to the dependencies field, and add the build and start:dev commands to the scripts field:

```json
{
  "name": "agora_web_quickstart",
  "version": "1.0.0",
  "description": "",
  "main": "basicEngagement.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1",
    "build": "webpack --config webpack.config.js",
    "start:dev": "webpack serve --open --config webpack.config.js"
  },
  "dependencies": {
    "agora-rtc-sdk-ng": "latest",
    "webpack": "5.28.0",
    "webpack-dev-server": "3.11.2",
    "webpack-cli": "4.10.0"
  },
  "author": "",
  "license": "ISC"
}
```

- In the project directory, create a file named webpack.config.js and copy the following code into it to configure webpack:

```javascript
const path = require("path");

module.exports = {
  entry: "./basicEngagement.js",
  output: {
    filename: "bundle.js",
    path: path.resolve(__dirname, "./dist"),
  },
  devServer: {
    compress: true,
    port: 9000,
  },
};
```

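- Run the following command to install the dependencies:

```shell
npm install
```

- Run the following command to build the project with webpack:

```shell
# Use webpack to package the project
npm run build
```

- Run the project with webpack-dev-server:

```shell
npm run start:dev
```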
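If npm run build or npm run start:dev fails because a script or module is missing, package.json is a likely cause. The following sketch is a hypothetical checker (not part of npm or webpack) that lists what the steps above expect but a parsed package.json lacks. To use it on your project, you could read the file with fs.readFileSync and pass the result of JSON.parse to it.

```javascript
// Hypothetical checker (not an npm or webpack tool): reports entries that the
// steps above expect in package.json but that are missing.
function checkPackageJson(pkg) {
  const problems = [];
  const deps = { ...(pkg.dependencies || {}), ...(pkg.devDependencies || {}) };
  for (const name of ["agora-rtc-sdk-ng", "webpack", "webpack-cli", "webpack-dev-server"]) {
    if (!deps[name]) problems.push(`missing dependency: ${name}`);
  }
  const scripts = pkg.scripts || {};
  for (const name of ["build", "start:dev"]) {
    if (!scripts[name]) problems.push(`missing script: ${name}`);
  }
  return problems;
}

// A package.json from the "Integrate the SDK" step, before the changes above.
console.log(checkPackageJson({
  dependencies: { "agora-rtc-sdk-ng": "latest" },
  scripts: { test: "echo" },
}));
```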

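- Running the web app on a local server (localhost) is for testing only. When you deploy to production, make sure to use HTTPS.
- Because browser security policies restrict HTTP addresses other than 127.0.0.1, the Agora Web SDK supports only HTTPS and http://localhost (http://127.0.0.1). Do not access your project over HTTP at any address other than http://localhost (http://127.0.0.1).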
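The access rule in the note above can be turned into a quick check you might run on window.location.href before joining a channel. isSupportedOrigin is a hypothetical helper that encodes only that rule (HTTPS anywhere, plain HTTP only on localhost or 127.0.0.1). Browsers also expose a related built-in flag, window.isSecureContext, which you may prefer in production code.

```javascript
// Hypothetical helper: encodes the access rule in the note above.
function isSupportedOrigin(href) {
  const url = new URL(href);
  if (url.protocol === "https:") return true;
  if (url.protocol === "http:") {
    return url.hostname === "localhost" || url.hostname === "127.0.0.1";
  }
  return false;
}

console.log(isSupportedOrigin("http://localhost:9000/"));    // true
console.log(isSupportedOrigin("http://192.168.1.10:9000/")); // false
console.log(isSupportedOrigin("https://example.com/"));      // true
```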

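Expected results

Interactive live streaming:

Your browser automatically opens the following page:

Click Join as host to join the channel as a host. You can also invite a friend to clone the Gitee or GitHub sample project, open src/index.html in a browser, and enter the same App ID, channel name, and temporary token. If your friend joins as a host, you can see and hear each other. If your friend joins as an audience member, your friend can see and hear you, but you cannot see or hear your friend.

Video call:

Your browser automatically opens the following page:

Click JOIN to join the channel. You can also invite a friend to clone the Gitee or GitHub sample project, open src/index.html in a browser, and enter the same App ID, channel name, and temporary token. After your friend joins the channel, you can see and hear each other.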

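Next steps

After implementing audio and video interaction, read the following documents to learn more:

- The example in this article uses a temporary token to join the channel. In a production environment, generate tokens on your own app server to keep communication secure. See Use Token authentication.
- To implement fast live streaming (极速直播), build on interactive live streaming and set the audience's latency level to low latency. See Implement fast live streaming.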
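For the low-latency audience mentioned above: in Web SDK 4.x, setClientRole accepts an optional options object whose level field sets the audience latency level, where 1 means low latency and 2 means ultra-low latency. Confirm these values in the API reference for your SDK version. joinAsLowLatencyAudience is a hypothetical helper, and the client (created in "live" mode) is passed in so that the call sequence can be checked with a stand-in.

```javascript
// Hypothetical helper for a low-latency audience member.
// Assumption: level 1 = low latency, 2 = ultra-low latency (Web SDK 4.x values;
// confirm against the API reference for your SDK version).
const AUDIENCE_LEVEL_LOW_LATENCY = 1;

async function joinAsLowLatencyAudience(client, options) {
  // Set the audience role with the low-latency level before joining.
  await client.setClientRole("audience", { level: AUDIENCE_LEVEL_LOW_LATENCY });
  await client.join(options.appId, options.channel, options.token, options.uid);
}
```

You could call it from the audience-join handler in place of its setClientRole and join calls.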

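Related information

This section provides additional information for reference.

Sample project

Agora provides an open-source sample project. You can download it or view its source code.

Other integration methods

When you integrate the Web SDK with npm, you can also enable tree shaking to reduce the size of your app. See Use tree shaking.

Besides npm, you can get the Web SDK in the following ways:

- Use the CDN: add the following code to your project's HTML file:

```html
<script src="https://download.agora.io/sdk/release/AgoraRTC_N-4.21.0.js"></script>
```

- Download the Agora Web SDK 4.x package, save the .js file from the package to your project directory, and then add the following code to your project's HTML file:

```html
<script src="./AgoraRTC_N-4.21.0.js"></script>
```

Note: with either method above, the SDK exports a global AgoraRTC object. Access this object directly to use the SDK.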
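With the script-tag methods there is no import statement; your code reads the global object instead. The sketch below, a hypothetical getAgoraRTC helper, fails with a clear message if the script tag is missing or has not loaded yet. It takes the global object (window in a browser) as a parameter so that it can be tested outside a browser.

```javascript
// Hypothetical helper: returns the AgoraRTC object that the SDK script
// exports globally, or throws if the <script> tag has not been loaded.
function getAgoraRTC(globalObj) {
  const AgoraRTC = globalObj.AgoraRTC;
  if (!AgoraRTC) {
    throw new Error("AgoraRTC is not defined: check the SDK <script> tag in your HTML file.");
  }
  return AgoraRTC;
}

// In the browser: const AgoraRTC = getAgoraRTC(window);
```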

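FAQ

Why does the error "digital envelope routines::unsupported" occur when I run the quickstart project locally?

The quickstart project in this article is bundled with webpack and runs locally. Node.js 17 and later switched to OpenSSL 3.0 (see the Node.js issue), which breaks a dependency of the project's local development environment (see the webpack issue), so running the project fails with this error. To fix it, do either of the following:

- (Recommended) Run the following command to set a temporary environment variable:

```shell
export NODE_OPTIONS=--openssl-legacy-provider
```

  The export command works in macOS and Linux shells. In the Windows Command Prompt, run set NODE_OPTIONS=--openssl-legacy-provider instead.

- Temporarily switch to an earlier version of Node.js.

Then try running the project again.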
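To decide whether the workaround applies, check your Node.js version first (node --version prints it). The sketch below is a hypothetical helper that assumes the error appears only on Node.js 17 and later, the first releases to ship with OpenSSL 3.0.

```javascript
// Hypothetical helper: reports whether the NODE_OPTIONS workaround is likely
// needed, assuming the error only appears on Node.js 17 and later.
function needsLegacyOpenSSLProvider(nodeVersion = process.version) {
  // process.version looks like "v18.19.0".
  const major = Number(nodeVersion.replace(/^v/, "").split(".")[0]);
  return major >= 17;
}

console.log(process.version, needsLegacyOpenSSLProvider());
```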